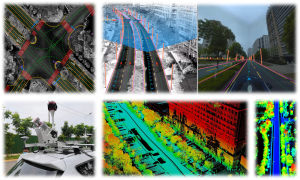

Our research group focuses on two core domains, Intelligent Spatial Perception and Autonomous Navigation, guided by advances at the frontier of Geospatial Artificial Intelligence (GeoAI). We integrate technologies including LiDAR SLAM, photogrammetry, and multi-source information fusion, with a strategic emphasis on Geospatial Foundation Models.

To meet the operational requirements of mobile platforms such as UAVs and quadruped robots in complex scenarios, we work to overcome the technical challenges of GPS-denied environments and sub-canopy spaces. We are particularly interested in leveraging large-scale pre-trained models to enhance sensor performance, achieve high-precision positioning, and enable robust autonomous obstacle avoidance and safe flight control under demanding conditions.

By harnessing Geospatial Foundation Models, we move beyond traditional data processing toward multimodal spatial semantic understanding and spatio-temporal knowledge reasoning. Our research explores the zero-shot transfer capabilities of foundation models for automated feature recognition, complex scene classification, and the intelligent interpretation of spatial relationships.

By bridging Embodied AI with geographic priors, we have established a comprehensive “Perception – Decision – Control – Application” technology framework. This framework provides efficient, reliable solutions for the low-altitude economy, sub-canopy flight, road inspection, and operations in mountainous terrain.